Computer

IBM

Once upon a time, the computer company most hackers loved to hate; today, the one they are most puzzled to find themselves liking. From hackerdom's beginnings in the mid-1960s to the early 1990s, IBM was regarded with active loathing. Common expansions of the corporate name included: Inferior But Marketable; It's Better Manually; Insidious Black Magic; It's Been Malfunctioning; Incontinent Bowel Movement; and a near-{infinite} number of even less complimentary expansions (see also {fear and loathing}). What galled hackers about most IBM machines above the PC level wasn't so much that they were underpowered and overpriced (though that counted against them), but that the designs were incredibly archaic, {crufty}, and {elephantine} ... and you couldn't fix them -- source code was locked up tight, and programming tools were expensive, hard to find, and bletcherous to use once you had found them.

We didn't know how good we had it back then. In the 1980s IBM had its own troubles with Microsoft and lost its strategic way, receding from the hacker community's view. Then, in the 1990s, Microsoft became more noxious and omnipresent than IBM had ever been.

In the late 1990s IBM re-invented itself as a services company, began to release open-source software through its AlphaWorks group, and began shipping {Linux} systems and building ties to the Linux community. To the astonishment of all parties, IBM emerged as a staunch friend of the hacker community and {open source} development, with ironic consequences noted in the {FUD} entry.

This lexicon includes a number of entries attributed to `IBM'; these derive from some rampantly unofficial jargon lists circulated within IBM's formerly beleaguered hacker underground.

Labels: Code, Common, Computer, Developer, GNU, IBM, Linux, Software, Source

# 5/31/2009 01:31:00 AM, Comments, Links to this post

wetware

[prob.: from the novels of Rudy Rucker]

- The human nervous system, as opposed to computer hardware or software. "Wetware has 7 plus or minus 2 temporary registers."

- Human beings (programmers, operators, administrators) attached to a computer system, as opposed to the system's hardware or software. See {liveware}, {meatware}.

Labels: Business, Computer, Hardware, Retailers, Software, Technology, WetWare

# 5/25/2009 02:31:00 PM, Comments, Links to this post

trap

- n. A program interrupt, usually an interrupt caused by some exceptional situation in the user program. In most cases, the OS performs some action, then returns control to the program.

- vi. To cause a trap. "These instructions trap to the monitor." Also used transitively to indicate the cause of the trap. "The monitor traps all input/output instructions."

This term is associated with assembler programming (interrupt or exception is more common among {HLL} programmers) and appears to be fading into history among programmers as the role of assembler continues to shrink. However, it is still important to computer architects and systems hackers (see {system}, sense 1), who use it to distinguish deterministically repeatable exceptions from timing-dependent ones (such as I/O interrupts).

Labels: Assembly, Common, Computer, Languages, Programming, Trap

# 5/23/2009 12:01:00 AM, Comments, Links to this post

virtual

[via the technical term virtual memory, prob.: from the term virtual image in optics]

- Common alternative to {logical}; often used to refer to the artificial objects (like addressable virtual memory larger than physical memory) simulated by a computer system as a convenient way to manage access to shared resources.

- Simulated; performing the functions of something that isn't really there. An imaginative child's doll may be a virtual playmate. Oppose {real}.

Labels: Common, Components, Computer, Hardware, Memory, Optics

# 5/20/2009 01:30:00 AM, Comments, Links to this post

SEX

[Sun Users' Group & elsewhere] n.

- Software EXchange. A technique invented by the blue-green algae hundreds of millions of years ago to speed up their evolution, which had been terribly slow up until then. Today, SEX parties are popular among hackers and others (of course, these are no longer limited to exchanges of genetic software). In general, SEX parties are a {Good Thing}, but unprotected SEX can propagate a {virus}. See also {pubic directory}.

- The rather Freudian mnemonic often used for Sign EXtend, a machine instruction found in the {PDP-11} and many other architectures. The RCA 1802 chip used in the early Elf and SuperElf personal computers had a `SEt X register' SEX instruction, but this seems to have had little folkloric impact. The Data General instruction set also had SEX.

{DEC}'s engineers nearly got a {PDP-11} assembler that used the SEX mnemonic out the door at one time, but (for once) marketing wasn't asleep and forced a change. That wasn't the last time this happened, either. The author of The Intel 8086 Primer, who was one of the original designers of the 8086, noted that there was originally a SEX instruction on that processor, too. He says that Intel management got cold feet and decreed that it be changed, and thus the instruction was renamed CBW and CWD (depending on what was being extended). Amusingly, the Intel 8048 (the microcontroller used in IBM PC keyboards) is also missing straight SEX but has logical-or and logical-and instructions ORL and ANL.

The Motorola 6809, used in the Radio Shack Color Computer and in U.K.'s `Dragon 32' personal computer, actually had an official SEX instruction; the 6502 in the Apple II with which it competed did not. British hackers thought this made perfect mythic sense; after all, it was commonly observed, you could (on some theoretical level) have sex with a dragon, but you can't have sex with an apple.
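For readers who have not met the operation behind the mnemonic, here is a minimal Python sketch of sign extension. The function names `sign_extend` and `widen_byte_to_word` are made up for illustration; the second one only mimics the effect of widening a byte to a 16-bit word, in the spirit of the 8086's CBW, and is not a model of any real instruction set.

```python
def sign_extend(value: int, bits: int) -> int:
    """Interpret the low `bits` bits of `value` as a two's-complement number."""
    mask = (1 << bits) - 1
    value &= mask                      # keep only the low `bits` bits
    sign_bit = 1 << (bits - 1)
    # If the sign bit is set, the quantity is negative: subtract 2**bits.
    return value - (1 << bits) if value & sign_bit else value


def widen_byte_to_word(byte: int) -> int:
    """Widen an 8-bit value to a 16-bit pattern, copying the sign bit upward."""
    return sign_extend(byte, 8) & 0xFFFF


print(hex(widen_byte_to_word(0x7F)))  # 0x7f   (positive: upper byte is zeros)
print(hex(widen_byte_to_word(0x80)))  # 0xff80 (negative: upper byte is ones)
```

The point is simply that the top bit of the narrow value gets copied into every new high-order bit, so the number keeps its signed meaning at the wider size.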

Labels: apple, Computer, Hardware, IBM, PDP-11, Software, Virus

# 5/19/2009 06:31:00 PM, Comments, Links to this post

Turing tar-pit

- A place where anything is possible but nothing of interest is practical. Alan Turing helped lay the foundations of computer science by showing that all machines and languages capable of expressing a certain very primitive set of operations are logically equivalent in the kinds of computations they can carry out, and in principle have capabilities that differ only in speed from those of the most powerful and elegantly designed computers. However, no machine or language exactly matching Turing's primitive set has ever been built (other than possibly as a classroom exercise), because it would be horribly slow and far too painful to use. A Turing tar-pit is any computer language or other tool that shares this property. That is, it's theoretically universal -- but in practice, the harder you struggle to get any real work done, the deeper its inadequacies suck you in. Compare {bondage-and-discipline language}.

- The perennial {holy wars} over whether language A or B is the "most powerful".

Labels: Computer, Languages

# 5/19/2009 04:31:00 PM, Comments, Links to this post

bboard

[contraction of `bulletin board']

- Any electronic bulletin board; esp. used of {BBS} systems running on personal micros, less frequently of a Usenet {newsgroup} (in fact, use of this term for a newsgroup generally marks one either as a {newbie} fresh in from the BBS world or as a real old-timer predating Usenet).

- At CMU and other colleges with similar facilities, refers to campus-wide electronic bulletin boards.

- The term physical bboard is sometimes used to refer to an old-fashioned, non-electronic cork-and-thumbtack memo board. At CMU, it refers to a particular one outside the CS Lounge.

In either of senses 1 or 2, the term is usually prefixed by the name of the intended board (`the Moonlight Casino bboard' or `market bboard'); however, if the context is clear, the better-read bboards may be referred to by name alone, as in (at CMU) "Don't post for-sale ads on general".

Labels: BBS, Computer, FAQ, Newbie, Newsgroup, Newsgroups, Usenet

# 5/19/2009 10:31:00 AM, Comments, Links to this post

cracker

One who breaks security on a system. Coined ca. 1985 by hackers in defense against journalistic misuse of {hacker} (q.v., sense 8). An earlier attempt to establish worm in this sense around 1981--82 on Usenet was largely a failure.

Use of both these neologisms reflects a strong revulsion against the theft and vandalism perpetrated by cracking rings. The neologism "cracker" in this sense may have been influenced not so much by the term "safe-cracker" as by the non-jargon term "cracker", which in Middle English meant an obnoxious person (e.g., "What cracker is this same that deafs our ears / With this abundance of superfluous breath?" -- Shakespeare's King John, Act II, Scene I) and in modern colloquial American English survives as a barely gentler synonym for "white trash".

While it is expected that any real hacker will have done some playful cracking and knows many of the basic techniques, anyone past {larval stage} is expected to have outgrown the desire to do so except for immediate, benign, practical reasons (for example, if it's necessary to get around some security in order to get some work done).

Thus, there is far less overlap between hackerdom and crackerdom than the {mundane} reader misled by sensationalistic journalism might expect. Crackers tend to gather in small, tight-knit, very secretive groups that have little overlap with the huge, open poly-culture this lexicon describes; though crackers often like to describe themselves as hackers, most true hackers consider them a separate and lower form of life. An easy way for outsiders to spot the difference is that crackers use grandiose screen names that conceal their identities. Hackers never do this; they only rarely use noms de guerre at all, and when they do it is for display rather than concealment.

Ethical considerations aside, hackers figure that anyone who can't imagine a more interesting way to play with their computers than breaking into someone else's has to be pretty {losing}. Some other reasons crackers are looked down on are discussed in the entries on {cracking} and {phreaking}. See also {samurai}, {dark-side hacker}, and {hacker ethic}. For a portrait of the typical teenage cracker, see {warez d00dz}.

Labels: Computer, English, Newsgroups, Security, Usenet

# 5/18/2009 11:31:00 PM, Comments, Links to this post

dike

To remove or disable a portion of something, as a wire from a computer or a subroutine from a program. A standard slogan is "When in doubt, dike it out". (The implication is that it is usually more effective to attack software problems by reducing complexity than by increasing it.) The word `dikes' is widely used to mean `diagonal cutters', a kind of wire cutter. To `dike something out' means to use such cutters to remove something. Indeed, the TMRC Dictionary defined dike as "to attack with dikes". Among hackers this term has been metaphorically extended to informational objects such as sections of code.

Labels: Code, Computer, Software

# 5/18/2009 07:31:00 PM, Comments, Links to this post

fence

n.

- A sequence of one or more distinguished ({out-of-band}) characters (or other data items), used to delimit a piece of data intended to be treated as a unit (the computer-science literature calls this a sentinel). The NUL (ASCII 0000000) character that terminates strings in C is a fence. Hex FF is also (though slightly less frequently) used this way. See {zigamorph}.

- An extra data value inserted in an array or other data structure in order to allow some normal test on the array's contents also to function as a termination test. For example, a highly optimized routine for finding a value in an array might artificially place a copy of the value to be searched for after the last slot of the array, thus allowing the main search loop to search for the value without having to check at each pass whether the end of the array had been reached.

- [among users of optimizing compilers] Any technique, usually exploiting knowledge about the compiler, that blocks certain optimizations. Used when explicit mechanisms are not available or are overkill. Typically a hack: "I call a dummy procedure there to force a flush of the optimizer's register-coloring info" can be expressed by the shorter "That's a fence procedure".
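The second sense above (a copy of the sought value planted past the end of the array) can be sketched roughly as follows. The function name `sentinel_search` is hypothetical, and Python is used only for readability: its list indexing still does its own bounds check internally, so the saving described in the entry really applies to languages such as C or assembler.

```python
def sentinel_search(items: list, target) -> int:
    """Return the index of `target` in `items`, or -1 if it is absent."""
    n = len(items)
    items.append(target)        # the fence: guarantees the loop below stops
    i = 0
    while items[i] != target:   # one comparison per pass, no end-of-array test
        i += 1
    items.pop()                 # take the fence back out
    return i if i < n else -1   # stopping on the fence itself means "not found"


data = [3, 1, 4, 1, 5]
print(sentinel_search(data, 4))   # 2
print(sentinel_search(data, 9))   # -1
```

The loop body tests only for a match; the "did we run off the end?" question is answered once, after the loop, by checking whether the match was the planted fence.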

Labels: ASCII, Compiler, Computer, Languages, Programming

# 5/18/2009 02:31:00 PM, Comments, Links to this post

megapenny

$10,000 (1 cent * 10^6). Used semi-humorously as a unit in comparing computer cost and performance figures.

Labels: Computer

# 5/17/2009 07:31:00 PM, Comments, Links to this post

milliLampson

A unit of talking speed, abbreviated mL. Most people run about 200 milliLampsons. The eponymous Butler Lampson (a CS theorist and systems implementor highly regarded among hackers) goes at 1000. A few people speak faster. This unit is sometimes used to compare the (sometimes widely disparate) rates at which people can generate ideas and actually emit them in speech. For example, noted computer architect C. Gordon Bell (designer of the {PDP-11}) is said, with some awe, to think at about 1200 mL but only talk at about 300; he is frequently reduced to fragments of sentences as his mouth tries to keep up with his speeding brain.

Labels: Abbreviation, Computer

# 5/17/2009 06:31:00 PM, Comments, Links to this post

spod

[UK]

- A lower form of life found on {talker system}s and {MUD}s. The spod has few friends in {RL} and uses talkers instead, finding communication easier and preferable over the net. He has all the negative traits of the computer geek without having any interest in computers per se. Lacking any knowledge of or interest in how networks work, and considering his access a God-given right, he is a major irritant to sysadmins, clogging up lines in order to reach new MUDs, following passed-on instructions on how to sneak his way onto Internet ("Wow! It's in America!") and complaining when he is not allowed to use busy routes. A true spod will start any conversation with "Are you male or female?" (and follow it up with "Got any good numbers/IDs/passwords?") and will not talk to someone physically present in the same terminal room until they log onto the same machine that he is using and enter talk mode.

- An experienced talker user. As with the defiant adoption of the term geek in the mid-1990s by people who would previously have been stigmatized by it, the term "spod" is now used as a mark of distinction by talker users who've accumulated a large amount of login time. Such spods tend to be very knowledgeable about talkers and talker coding, as well as more general hacker activities. An unusually high proportion of spods work in the ISP sector, a profession which allows for lengthy periods of login time and for under-the-desk servers, or "spodhosts", upon which talker systems are hosted. Compare {newbie}, {tourist}, {weenie}, {twink}, {terminal junkie}, {warez d00dz}.

- A {backronym} for "Sole Purpose, Obtain a Degree"; according to some self-described spods, this term is used by indifferent students to condemn their harder-working fellows.

- [Glasgow University] An otherwise competent hacker who spends way too much time on talker systems.

- [obs.] An ordinary person; a {random}. This is the meaning with which the term was coined, but the inventor informs us he has himself accepted sense 1.

Labels: 1990s, Chat, Computer, Internet, Leet, MUD, Server, Spod, Talkers

# 5/16/2009 09:31:00 PM, Comments, Links to this post

virus

[from the obvious analogy with biological viruses, via SF] A cracker program that searches out other programs and `infects' them by embedding a copy of itself in them, so that they become {Trojan horse}s. When these programs are executed, the embedded virus is executed too, thus propagating the `infection'. This normally happens invisibly to the user. Unlike a {worm}, a virus cannot infect other computers without assistance. It is propagated by vectors such as humans trading programs with their friends (see {SEX}). The virus may do nothing but propagate itself and then allow the program to run normally. Usually, however, after propagating silently for a while, it starts doing things like writing cute messages on the terminal or playing strange tricks with the display (some viruses include nice {display hack}s). Many nasty viruses, written by particularly perversely minded {cracker}s, do irreversible damage, like nuking all the user's files.

In the 1990s, viruses became a serious problem, especially among Windows users; the lack of security on these machines enables viruses to spread easily, even infecting the operating system (Unix machines, by contrast, are immune to such attacks). The production of special anti-virus software has become an industry, and a number of exaggerated media reports have caused outbreaks of near hysteria among users; many {luser}s tend to blame everything that doesn't work as they had expected on virus attacks. Accordingly, this sense of virus has passed not only into techspeak but also into popular usage (where it is often incorrectly used to denote a {worm} or even a {Trojan horse}). See {phage}; compare {back door}; see also {Unix conspiracy}.

Labels: AntiVirus, Computer, Infection, Microsoft, Security, Software, Virus, Windows

# 5/16/2009 12:31:00 PM, Comments, Links to this post

wugga wugga

Imaginary sound that a computer program makes as it labors with a tedious or difficult task. Compare {grind} (sense 4).

# 5/16/2009 08:31:00 AM, Comments, Links to this post

ELIZA effect

[AI community] The tendency of humans to attach associations to terms from prior experience. For example, there is nothing magic about the symbol + that makes it well-suited to indicate addition; it's just that people associate it with addition. Using + or `plus' to mean addition in a computer language is taking advantage of the ELIZA effect.

This term comes from the famous ELIZA program by Joseph Weizenbaum, which simulated a Rogerian psychotherapist by rephrasing many of the patient's statements as questions and posing them to the patient. It worked by simple pattern recognition and substitution of key words into canned phrases. It was so convincing, however, that there are many anecdotes about people becoming very emotionally caught up in dealing with ELIZA. All this was due to people's tendency to attach to words meanings which the computer never put there. The ELIZA effect is a {Good Thing} when writing a programming language, but it can blind you to serious shortcomings when analyzing an Artificial Intelligence system. Compare {ad-hockery}; see also {AI-complete}. Sources for a clone of the original Eliza are available at ftp://ftp.cc.utexas.edu/pub/AI_ATTIC/Programs/Classic/Eliza/Eliza.c.
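To make "simple pattern recognition and substitution of key words into canned phrases" concrete, here is a toy sketch in Python. The rules, the `respond` function and the default reply are all invented for illustration; they are not taken from Weizenbaum's script, which among other things also swapped pronouns.

```python
import re

# Hypothetical keyword rules: a pattern to spot and a canned phrase to fill in.
RULES = [
    (re.compile(r"\bI am (.+)", re.IGNORECASE), "Why do you say you are {0}?"),
    (re.compile(r"\bI feel (.+)", re.IGNORECASE), "How long have you felt {0}?"),
    (re.compile(r"\bmy (.+)", re.IGNORECASE), "Tell me more about your {0}."),
]
DEFAULT_REPLY = "Please go on."


def respond(statement: str) -> str:
    """Turn a statement into a question by substituting into a canned phrase."""
    text = statement.strip().rstrip(".!?")
    for pattern, template in RULES:
        match = pattern.search(text)
        if match:
            return template.format(match.group(1))
    return DEFAULT_REPLY            # nothing recognized: fall back to a stock line


print(respond("I am tired of debugging."))  # Why do you say you are tired of debugging?
print(respond("Hello"))                     # Please go on.
```

Nothing here understands anything; whatever meaning the reply seems to carry is supplied by the reader, which is the effect the entry describes.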

Labels: Computer, Languages, Source

# 5/16/2009 01:31:00 AM, Comments, Links to this post

SIG

(also common as a prefix in combining forms) A Special Interest Group, in one of several technical areas, sponsored by the Association for Computing Machinery; well-known ones include SIGPLAN (the Special Interest Group on Programming Languages), SIGARCH (the Special Interest Group for Computer Architecture) and SIGGRAPH (the Special Interest Group for Computer Graphics). Hackers, not surprisingly, like to overextend this naming convention to less formal associations like SIGBEER (at ACM conferences) and SIGFOOD (at University of Illinois).

Labels: Common, Computer, Languages

# 5/15/2009 02:31:00 PM, Comments, Links to this post

bandwidth

- [common] Used by hackers (in a generalization of its technical meaning) as the volume of information per unit time that a computer, person, or transmission medium can handle. "Those are amazing graphics, but I missed some of the detail -- not enough bandwidth, I guess." Compare {low-bandwidth}; see also {brainwidth}. This generalized usage began to go mainstream after the Internet population explosion of 1993-1994.

- Attention span.

- On {Usenet}, a measure of network capacity that is often wasted by people complaining about how items posted by others are a waste of bandwidth.

Labels: Bandwidth, Common, Computer, Information, Internet, Newsgroups, Usenet, Volume

# 5/15/2009 07:31:00 AM, Comments, Links to this post

bit

[from the mainstream meaning and "Binary digIT"]

- [techspeak] The unit of information; the amount of information obtained from knowing the answer to a yes-or-no question for which the two outcomes are equally probable.

- [techspeak] A computational quantity that can take on one of two values, such as true and false or 0 and 1.

- A mental flag: a reminder that something should be done eventually. "I have a bit set for you." (I haven't seen you for a while, and I'm supposed to tell or ask you something.)

- More generally, a (possibly incorrect) mental state of belief. "I have a bit set that says that you were the last guy to hack on EMACS." (Meaning "I think you were the last guy to hack on EMACS, and what I am about to say is predicated on this, so please stop me if this isn't true.") "I just need one bit from you" is a polite way of indicating that you intend only a short interruption for a question that can presumably be answered yes or no.

A bit is said to be set if its value is true or 1, and reset or clear if its value is false or 0. One speaks of setting and clearing bits. To {toggle} or invert a bit is to change it, either from 0 to 1 or from 1 to 0. See also {flag}, {trit}, {mode bit}.
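Setting, clearing and toggling as just described map directly onto the bitwise operators found in most languages. A small Python sketch, with helper names (`set_bit`, `clear_bit`, `toggle_bit`, `is_set`) chosen here purely for illustration:

```python
def set_bit(word: int, n: int) -> int:
    """Force bit n to 1."""
    return word | (1 << n)


def clear_bit(word: int, n: int) -> int:
    """Force bit n to 0 (also called resetting it)."""
    return word & ~(1 << n)


def toggle_bit(word: int, n: int) -> int:
    """Invert bit n: 0 becomes 1 and 1 becomes 0."""
    return word ^ (1 << n)


def is_set(word: int, n: int) -> bool:
    """Report whether bit n is 1."""
    return (word >> n) & 1 == 1


flags = 0b0000
flags = set_bit(flags, 2)      # 0b0100
flags = toggle_bit(flags, 0)   # 0b0101
flags = clear_bit(flags, 2)    # 0b0001
print(bin(flags), is_set(flags, 0), is_set(flags, 2))  # 0b1 True False
```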

The term bit first appeared in print in the computer-science sense in a 1948 paper by information theorist Claude Shannon, and was there credited to the early computer scientist John Tukey (who also seems to have coined the term software). Tukey records that bit evolved over a lunch table as a handier alternative to bigit or binit, at a conference in the winter of 1943-44.

Labels: Bit, Computer, Programming, Software

# 5/15/2009 05:31:00 AM, Comments, Links to this post

cracking

[very common] The act of breaking into a computer system; what a {cracker} does. Contrary to widespread myth, this does not usually involve some mysterious leap of hackerly brilliance, but rather persistence and the dogged repetition of a handful of fairly well-known tricks that exploit common weaknesses in the security of target systems. Accordingly, most crackers are incompetent as hackers. This entry used to say 'mediocre', but the spread of {rootkit} and other automated cracking has depressed the average level of skill among crackers.

Labels: Common, Computer, Cracker, Hacking, Rootkit, Security

# 5/14/2009 07:31:00 PM, Comments, Links to this post

dongle

- [now obs.] A security or {copy protection} device for proprietary software consisting of a serialized EPROM and some drivers in a D-25 connector shell, which must be connected to an I/O port of the computer while the program is run. Programs that use a dongle query the port at startup and at programmed intervals thereafter, and terminate if it does not respond with the dongle's programmed validation code. Thus, users can make as many copies of the program as they want but must pay for each dongle. The first sighting of a dongle was in 1984, associated with a software product called PaperClip. The idea was clever, but it was initially a failure, as users disliked tying up a serial port this way. By 1993, dongles would typically pass data through the port and monitor for {magic} codes (and combinations of status lines) with minimal if any interference with devices further down the line -- this innovation was necessary to allow daisy-chained dongles for multiple pieces of software. These devices have become rare as the industry has moved away from copy-protection schemes in general.

- By extension, any physical electronic key or transferable ID required for a program to function. Common variations on this theme have used parallel or even joystick ports. See {dongle-disk}.

- An adaptor cable mating a special edge-type connector on a PCMCIA or on-board Ethernet card to a standard 8p8c Ethernet jack. This usage seems to have surfaced in 1999 and is now dominant. Laptop owners curse these things because they're notoriously easy to lose and the vendors commonly charge extortionate prices for replacements.

[Note: in early 1992, advertising copy from Rainbow Technologies (a manufacturer of dongles) included a claim that the word derived from "Don Gall", allegedly the inventor of the device. The company's receptionist will cheerfully tell you that the story is a myth invented for the ad copy. Nevertheless, I expect it to haunt my life as a lexicographer for at least the next ten years. :-( --ESR]

Labels: Code, Common, Computer, Security, Software

# 5/14/2009 02:31:00 PM, Comments, Links to this post

flag day

A software change that is neither forward- nor backward-compatible, and which is costly to make and costly to reverse. "Can we install that without causing a flag day for all users?" This term has nothing to do with the use of the word {flag} to mean a variable that has two values. It came into use when a change was made to the definition of the ASCII character set during the development of {Multics}. The change was scheduled for Flag Day (a U.S. holiday), June 14, 1966.

The change altered the Multics definition of ASCII from the short-lived 1965 version of the ASCII code to the 1967 version (in draft at the time); this moved code points for braces, vertical bar, and circumflex. See also {backward combatability}. The {Great Renaming} was a flag day.

[Most of the changes were made to files stored on {CTSS}, the system used to support Multics development before it became self-hosting.]

[As it happens, the first installation of a commercially-produced computer, a Univac I, took place on Flag Day of 1951 --ESR]

Labels: Code, Computer, Developer, Software

# 5/14/2009 09:31:00 AM, Comments, Links to this post

grind crank

A mythical accessory to a terminal. A crank on the side of a monitor, which when operated makes a zizzing noise and causes the computer to run faster. Usually one does not refer to a grind crank out loud, but merely makes the appropriate gesture and noise. See {grind}.

Historical note: At least one real machine actually had a grind crank -- the R1, a research machine built toward the end of the days of the great vacuum tube computers, in 1959. R1 (also known as `The Rice Institute Computer' (TRIC) and later as `The Rice University Computer' (TRUC)) had a single-step/free-run switch for use when debugging programs. Since single-stepping through a large program was rather tedious, there was also a crank with a cam and gear arrangement that repeatedly pushed the single-step button. This allowed one to `crank' through a lot of code, then slow down to single-step for a bit when you got near the code of interest, poke at some registers using the console typewriter, and then keep on cranking. See http://www.cs.rice.edu/History/R1/.

Labels: Code, Computer, Console, Debug

# 5/14/2009 03:31:00 AM, Comments, Links to this post

little-endian

Describes a computer architecture in which, within a given 16- or 32-bit word, bytes at lower addresses have lower significance (the word is stored `little-end-first'). The {PDP-11} and {VAX} families of computers and Intel microprocessors and a lot of communications and networking hardware are little-endian. See {big-endian}, {middle-endian}, {NUXI problem}. The term is sometimes used to describe the ordering of units other than bytes; most often, bits within a byte.
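A quick way to see the byte ordering is to serialize the same 32-bit value both ways. The sketch below uses Python's standard `struct` module; the value `0x0A0B0C0D` is an arbitrary example picked so that each byte is easy to spot.

```python
import struct

value = 0x0A0B0C0D

little = struct.pack("<I", value)   # "<" = little-endian, "I" = unsigned 32-bit
big = struct.pack(">I", value)      # ">" = big-endian

print(little.hex())  # 0d0c0b0a  (least significant byte at the lowest address)
print(big.hex())     # 0a0b0c0d  (most significant byte at the lowest address)

# Reading the bytes back with the matching convention recovers the value.
assert int.from_bytes(little, "little") == value
assert int.from_bytes(big, "big") == value
```

Reading little-endian bytes with a big-endian convention (or the reverse) yields a different number, which is the root of the {NUXI problem} mentioned above.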

Labels: Computer, Hardware

# 5/13/2009 06:31:00 PM, Comments, Links to this post

life

- A cellular-automata game invented by John Horton Conway and first introduced publicly by Martin Gardner (Scientific American, October 1970); the game's popularity had to wait a few years for computers on which it could reasonably be played, as it's no fun to simulate the cells by hand. Many hackers pass through a stage of fascination with it, and hackers at various places contributed heavily to the mathematical analysis of this game (most notably Bill Gosper at MIT, who even implemented life in {TECO}!). When a hacker mentions `life', he is much more likely to mean this game than the magazine, the breakfast cereal, or the human state of existence. Many web resources are available starting from the Open Directory page of Life. The Life Lexicon is a good indicator of what makes the game so fascinating.

[glider.png]

A glider, possibly the best known of the quasi-organic phenomena in the Game of Life.

- The opposite of {Usenet}. As in "{Get a life!}"
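The glider from the first sense is easy to reproduce. Below is a minimal Python sketch of Conway's rules on an unbounded grid, storing only the live cells as a set of (x, y) pairs; the function name `step` and the coordinate layout (y increasing downward) are choices made for this example, not anything prescribed by the game.

```python
from collections import Counter


def step(live: set) -> set:
    """Compute the next generation from a set of live (x, y) cells."""
    # Count, for every cell adjacent to a live cell, how many live neighbors it has.
    counts = Counter(
        (x + dx, y + dy)
        for (x, y) in live
        for dx in (-1, 0, 1)
        for dy in (-1, 0, 1)
        if (dx, dy) != (0, 0)
    )
    # Birth on exactly 3 neighbors; survival on 2 or 3.
    return {cell for cell, n in counts.items()
            if n == 3 or (n == 2 and cell in live)}


# The glider:   . O .
#               . . O
#               O O O
glider = {(1, 0), (2, 1), (0, 2), (1, 2), (2, 2)}

cells = glider
for _ in range(4):
    cells = step(cells)

# After four generations the same shape reappears, shifted one cell diagonally.
print(cells == {(x + 1, y + 1) for (x, y) in glider})  # True
```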

Labels: Computer, Games, Newsgroups, Usenet

# 4/30/2009 03:31:00 PM, Comments, Links to this post

boot

[techspeak; from `by one's bootstraps'] To load and initialize the operating system on a machine. This usage is no longer jargon (having passed into techspeak) but has given rise to some derivatives that are still jargon.

The derivative reboot implies that the machine hasn't been down for long, or that the boot is a {bounce} (sense 4) intended to clear some state of {wedgitude}. This is sometimes used of human thought processes, as in the following exchange: "You've lost me." "OK, reboot. Here's the theory...."

This term is also found in the variants cold boot (from power-off condition) and warm boot (with the CPU and all devices already powered up, as after a hardware reset or software crash).

Another variant: soft boot, reinitialization of only part of a system, under control of other software still running: "If you're running the {mess-dos} emulator, control-alt-insert will cause a soft-boot of the emulator, while leaving the rest of the system running."

Opposed to this there is hard boot, which connotes hostility towards or frustration with the machine being booted: "I'll have to hard-boot this losing Sun." "I recommend booting it hard." One often hard-boots by performing a {power cycle}.

Historical note: this term derives from bootstrap loader, a short program that was read in from cards or paper tape, or toggled in from the front panel switches. This program was always very short (great efforts were expended on making it short in order to minimize the labor and chance of error involved in toggling it in), but was just smart enough to read in a slightly more complex program (usually from a card or paper tape reader), to which it handed control; this program in turn was smart enough to read the application or operating system from a magnetic tape drive or disk drive. Thus, in successive steps, the computer `pulled itself up by its bootstraps' to a useful operating state. Nowadays the bootstrap is usually found in ROM or EPROM, and reads the first stage in from a fixed location on the disk, called the `boot block'. When this program gains control, it is powerful enough to load the actual OS and hand control over to it.

Labels: boot, Bootstrapping, Computer, EPROM, Hardware, Software

# 4/15/2009 11:31:00 PM, Comments, Links to this post

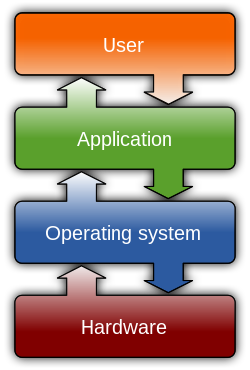

user

- Someone doing `real work' with the computer, using it as a means rather than an end. Someone who pays to use a computer. See {real user}.

- A programmer who will believe anything you tell him. One who asks silly questions. [GLS observes: This is slightly unfair. It is true that users ask questions (of necessity). Sometimes they are thoughtful or deep. Very often they are annoying or downright stupid, apparently because the user failed to think for two seconds or look in the documentation before bothering the maintainer.] See {luser}.

- Someone who uses a program from the outside, however skillfully, without getting into the internals of the program. One who reports bugs instead of just going ahead and fixing them.

The general theory behind this term is that there are two classes of people who work with a program: there are implementors (hackers) and {luser}s. The users are looked down on by hackers to some extent because they don't understand the full ramifications of the system in all its glory. (The few users who do are known as real winners.) The term is a relative one: a skilled hacker may be a user with respect to some program he himself does not hack. A LISP hacker might be one who maintains LISP or one who uses LISP (but with the skill of a hacker). A LISP user is one who uses LISP, whether skillfully or not. Thus there is some overlap between the two terms; the subtle distinctions must be resolved by context.

Labels: Computer, Hacker, Hacking, Languages, Lisp, Programmer, Programming

# 2/18/2009 02:31:00 AM, Comments, Links to this post